Comparing the pros and cons of deepfake technology is like handing someone your voice and hoping they speak your truth. But what if they do not? Can telling the truth after the lie undo the damage to your identity?

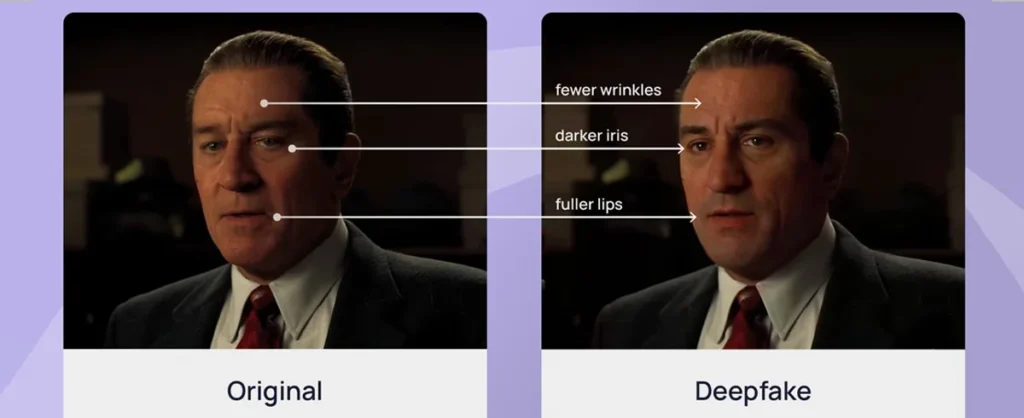

Deepfakes represent a significant breakthrough in artificial intelligence and machine learning, enabling the creation of hyper-realistic versions of human faces, voices, and movements. This means we can now generate convincing images of things people never did and videos of things they never said. And guess what? You may struggle to tell whether an AI-generated face is real.

This article explores how deepfake technology works, its consequences, privacy risks, and how to protect yourself against its growing threats.

The term “deepfake” combines “deep learning” and “fake.” It refers to the use of advanced AI and machine learning algorithms to manipulate or generate media with a high degree of realism.

Put another way, deepfakes are fake faces, videos, and audio clips with real-world consequences. On the positive side, they can be used for voice-overs, dubbing, or visual effects in entertainment. However, the disadvantages of deepfake technology include digital misinformation, cybersecurity threats, and AI-driven impersonation using synthetic faces and voices.

Deepfake technology goes beyond basic editing tools like Canva or Adobe Photoshop. It involves advanced video and audio manipulation systems powered by artificial intelligence and machine learning, built on these technologies:

After the US Department of Defense invested $2.4 million in deepfake detection technology, Kevin Guo, the CEO of Hive AI, described defending against deepfakes as “the evolution of cyberwarfare.” The consequences of deepfakes can be brutal since the technology supports:

However, making people appear to say or do things they never actually did is not the only problem with deepfake videos. A growing concern is the easy accessibility of deepfake tools, from open-source projects like DeepFaceLab and FaceSwap to consumer apps like Zao. Gone are the days when only experienced programmers could create deepfakes or when high-end computing systems were required to run these models.

The legal status of deepfake technology is tricky since many image rights and privacy laws were established before deepfakes even existed. However, legislators in developed countries are working on policies to regulate its use. As of today, watching deepfake videos is not illegal in most countries, especially when the content is publicly available.

Legal issues mainly arise based on the intent and purpose behind the creation or distribution of deepfakes. Criminal charges may apply if deepfakes are used to:

– Commit fraud

– Spread digital misinformation

– Manipulate political outcomes

– Create non-consensual explicit content, such as revenge porn

Detecting deepfakes using generative artificial intelligence is like a neural ouroboros: an algorithmic loop where the same technology that creates deception is tasked with exposing it. Generative Adversarial Networks (GANs) pair a generator with a discriminator, and their contest mirrors what researchers describe as a “fake detection arms race.” As the generator gets better at producing fake content, the discriminator is trained on both real and generated samples and gets better at telling them apart.
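
To make the generator-versus-discriminator contest concrete, here is a minimal sketch of the two losses a GAN typically optimizes, written in plain Python. It assumes the standard binary cross-entropy objective with the common "non-saturating" generator loss; the function names and the sample scores are hypothetical and only illustrate the idea. They are not taken from any specific detection system.

```python
import math
from typing import Sequence


def bce(prob: float, label: int) -> float:
    """Binary cross-entropy for one score; prob is the discriminator's P(sample is real)."""
    eps = 1e-12  # clamp so log() never receives 0
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """The discriminator wants real samples scored near 1 and fakes near 0."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: Sequence[float]) -> float:
    """The generator wants its fakes scored as real (non-saturating form)."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)


# Hypothetical discriminator scores, for illustration only.
real_scores = [0.90, 0.95, 0.85]   # genuine media, scored as likely real
early_fakes = [0.05, 0.10, 0.08]   # crude fakes, easily flagged
late_fakes = [0.45, 0.55, 0.50]    # refined fakes, near coin-flip scores

print("generator loss, early fakes:", round(generator_loss(early_fakes), 3))
print("generator loss, late fakes: ", round(generator_loss(late_fakes), 3))
print("discriminator loss, early:  ", round(discriminator_loss(real_scores, early_fakes), 3))
print("discriminator loss, late:   ", round(discriminator_loss(real_scores, late_fakes), 3))
```

As the fakes improve, the generator's loss falls while the discriminator's loss rises, which is the pressure that pushes the detector to keep retraining.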

Organizations like Accenture and DARPA are investing in advanced detection systems that incorporate GAN principles to stay ahead of deepfake innovations. The alternative is manual verification by humans, whose accuracy was slightly below 50% in a study published in PNAS (Proceedings of the National Academy of Sciences).

Although humans rarely spot deepfakes reliably, watch for the following signs to protect yourself and your sensitive data:

Deepfake technology has useful applications, especially in video games, entertainment, and some educational materials. However, its effects on the media and on how society perceives the truth are dangerous in the following ways:

Can deepfake videos and content be tracked? The answer is yes. Despite the privacy concerns around content misuse and the rising threat of cyber scams against firms holding sensitive data, it is possible to take proactive steps through:

The rising threat of deepfake technology is not just about tricking others, but about the real-world consequences of fake identities. Cybercriminals impersonate superiors to extract confidential information from employees, while celebrities face blackmail, and political systems risk manipulation. However, these dangerous uses of deepfake videos, audio, or text do not take away from the technology's potential in education, entertainment, and gaming.

As a society, we must not let malicious individuals tarnish a promising technology. The way forward includes updating legislation and raising public awareness of deepfake detection technology to reduce misuse and protect digital trust.
