The European Union's AI Act is the first comprehensive regulatory framework governing the safe, transparent, and ethical use of AI systems. Businesses must understand how these rules affect their operations, especially as compliance deadlines continue to phase in after the Act's passage. This guide explains the major SaaS compliance risks for businesses under EU laws and offers a concise roadmap for staying up to date with evolving regulations.

The EU AI Act entered into force on August 1, 2024. Article 5 prohibits the use of certain AI systems classified as “unacceptable risk,” and these prohibitions have applied since February 2, 2025. Beyond these bans, other sections of the Act classify the remaining AI systems, with progressive deadlines for general-purpose and high-risk categories to meet transparency, safety, and accountability requirements. The summary of the implementation timelines for these EU AI Act updates for businesses and developers is:

Keeping up with the latest EU AI Act updates and established frameworks, such as the General Data Protection Regulation (GDPR), is a challenge for some SaaS providers. A smart strategy to support B2B compliance with these obligations is categorizing them into the following:

Mishandling customer or employee personal data through overcollection, insecure storage, or non-transparent data usage can result in serious penalties under the EU regulatory frameworks. A recent example is Luka Inc.’s €5 million fine in Italy for lack of adequate transparency in its privacy policy and failure to protect minors using the platform.

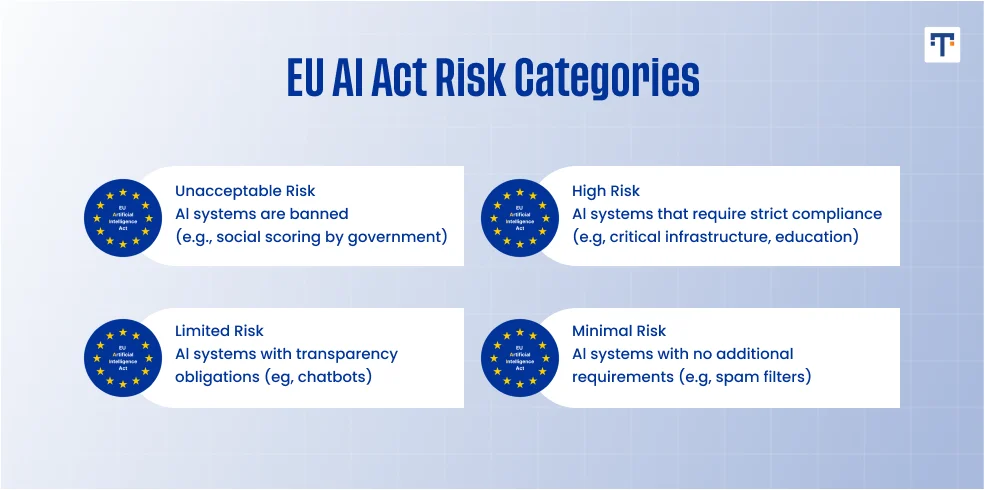

The EU AI Act classifies AI systems by risk into four tiers: minimal risk, specific transparency risk, high risk, and unacceptable risk. SaaS or on-premises solutions may fall into the high-risk category when used for sensitive applications, such as AI-driven recruitment and CV-screening tools in HR. For these systems, transparency about how the model works and when it is applied is strictly required for compliance.

The unacceptable risk category covers prohibited AI systems, including social scoring and certain forms of biometric categorization. Failing to classify AI systems correctly exposes B2B enterprises to non-compliance with the documentation, transparency, and explainability requirements that apply to each tier.
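As a rough illustration of how a team might track this classification internally, the sketch below tags entries in an AI inventory with one of the four tiers. The mapping uses only the examples named in this article; the names `RiskTier`, `USE_CASE_TIERS`, `classify`, and `needs_review` are hypothetical, and any real classification would need review against the Act itself.

```python
from enum import Enum
from typing import List, Optional


class RiskTier(Enum):
    MINIMAL = "minimal risk"
    TRANSPARENCY = "specific transparency risk"
    HIGH = "high risk"
    UNACCEPTABLE = "unacceptable risk"


# Hypothetical mapping, limited to the examples mentioned in this article.
# It is an assumption for illustration, not a complete or authoritative list.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "biometric_categorization": RiskTier.UNACCEPTABLE,
    "recruitment": RiskTier.HIGH,
    "cv_screening": RiskTier.HIGH,
}


def classify(use_case: str) -> Optional[RiskTier]:
    """Return the recorded tier, or None when the use case is not yet classified."""
    key = use_case.strip().lower().replace("-", "_").replace(" ", "_")
    return USE_CASE_TIERS.get(key)


def needs_review(inventory: List[str]) -> List[str]:
    """List the use cases that have no recorded tier and should go to review."""
    return [use_case for use_case in inventory if classify(use_case) is None]
```

Returning `None` for unknown use cases, rather than defaulting to minimal risk, keeps unclassified systems visible instead of silently treating them as safe.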

The EU laws for businesses protect customers’ rights to migrate their data easily through machine-readable formats. Vendor lock-ins of any kind may attract fines if reported. SaaS providers must also ensure transparency by including clear information on how user data is accessed and processed.
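One way a SaaS provider might support data migration is a self-service export in a machine-readable format such as JSON. The sketch below is a minimal example under assumed field names (`user_id`, `exported_at`, `format_version`, `records`); the function `export_user_data` is hypothetical and does not reflect any format prescribed by the regulations.

```python
import json
from datetime import datetime, timezone


def export_user_data(user_id: str, records: dict) -> str:
    """Serialize a user's records into a JSON document they can take elsewhere."""
    payload = {
        "user_id": user_id,
        # Timestamp in UTC so the export is unambiguous across regions.
        "exported_at": datetime.now(timezone.utc).isoformat(),
        # Assumed versioning field so importers can detect schema changes.
        "format_version": 1,
        "records": records,
    }
    return json.dumps(payload, indent=2, sort_keys=True, ensure_ascii=False)
```

A caller could write the returned string to a downloadable file, for example `export_user_data("u-123", {"profile": {"name": "Ana"}})`.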

Non-compliance can attract penalties, such as the €530 million (about $600 million) fine on TikTok in Ireland for failing to clearly inform users about cross-border transfers of personal data to China. Another example is Uber’s €290 million fine in the Netherlands for transferring drivers’ personal information to the United States without sufficient protection measures, such as Standard Contractual Clauses (SCCs).

SaaS compliance risks for B2B enterprises under the latest EU AI Act updates can become more complex when third-party vendors are involved. These may include smaller providers managing outsourced parts of the system, such as datasets, APIs, or AI and machine learning modules.

Under EU regulations, the registered service provider remains accountable for conformity checks, even if non-compliance originates from a third party. For example, Vodafone GmbH in Germany received a €45 million GDPR fine, of which €15 million was directly linked to failures caused by partner agencies.

Full compliance with the European Union AI laws helps businesses avoid regulatory fines and reputational damage. Due to the global influence of the EU AI Act updates, it also offers the following advantages to B2B enterprises:

B2B SaaS compliance with the European Union’s AI regulations is becoming a trust signal in the cloud computing space, where non-compliance can result in heavy penalties. Clients want reassurance that their data is safe, while investors often require proof of adherence to EU laws for businesses before committing to partnerships.

As the first binding regulation dedicated to artificial intelligence systems, the EU AI Act has a cross-border ripple effect that makes compliance effectively compulsory. Non-EU companies with users in Europe can align early with the regulatory standards to operate smoothly and avoid SaaS compliance risks.

Building trust through effective compliance strategies requires planning ahead of the phased deadlines in 2025 and beyond. Below is a focused B2B enterprise checklist for aligning with the EU’s artificial intelligence and data protection laws:

Enforcement of the EU AI Act may appear strict, but its clear purpose is to ensure the safe and transparent use of artificial intelligence and personal data. With stricter rules and upcoming deadlines, SaaS compliance with EU rules has become a trust signal for clients and investors. No business wants to face a multimillion-euro fine that could have been avoided through sound compliance practices. Complying with European Union regulations is a step-by-step process, and early movers can secure a competitive edge as trusted partners in the evolving global market.
