Artificial intelligence in 2025 has moved beyond the experimental stage and is now deeply embedded in business operations across Europe. Its advancement has reached a critical phase, however, as far-reaching European AI regulations begin to take effect. Alongside the UK AI regulations and other evolving legal frameworks, these laws are reshaping how artificial intelligence systems are designed, deployed, and marketed.

The message for B2B SaaS and technology providers is clear: approach compliance as both a mandatory requirement and an opportunity to earn trust and remain competitive. This article explains what the European AI regulations mean for B2B tech in 2025, the main compliance concerns, and how to stay ahead of future laws.

Here is what you should know about the EU AI Act enforcement and how it compares to the UK AI regulations:

The EU AI Act is the first comprehensive law dedicated to artificial intelligence. Ongoing debates about the responsible use of AI led to its creation, with the European Commission proposing the law in April 2021. It came into force on August 1, 2024, after approval by the European Parliament, the Council, and the member states of the EU.

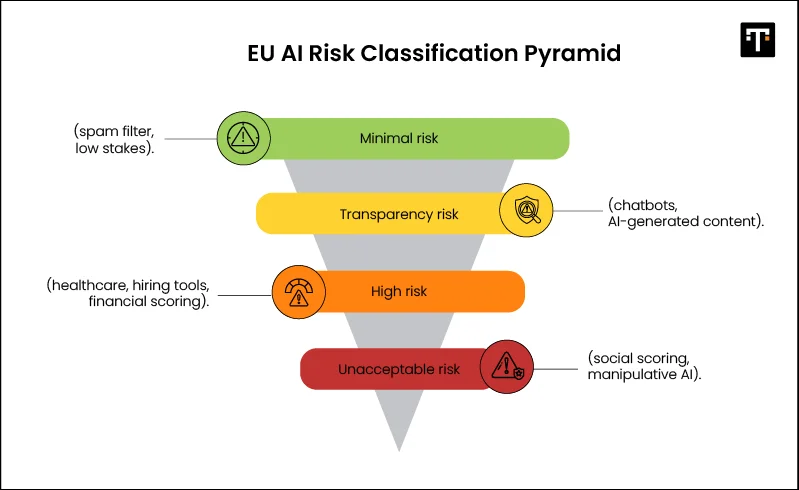

The AI Act applies one uniform, risk-based classification to artificial intelligence systems across all EU member states. It is commonly summarized as four tiers: unacceptable, high, limited, and minimal risk.

Unlike the European Union, the United Kingdom follows a decentralized, principles-based framework that relies on sector regulators. There is no general statutory AI law comparable to the EU's. Instead, existing regulators oversee AI compliance within their own industries. Voluntary technical standards, such as the P7000 series from the Institute of Electrical and Electronics Engineers (IEEE), can supplement that oversight. For more general governance, data protection rules such as the General Data Protection Regulation (GDPR) apply to how personal data is collected and processed.

The European Union and the United Kingdom share the same core objectives for artificial intelligence systems: transparency, accountability, and ethical compliance. Their approaches differ, however, and that difference determines how far their regulations reach.

For the EU, the unified tier framework for risk levels means consistent enforcement across all member states. The stringent tech laws on AI compliance also apply to any company offering AI services to EU customers, even if that company is based outside the European Union. This is called the Ripple Effect of the EU AI Act. These regulations can also affect procurement, because enterprise clients may demand proof of AI compliance before signing.

Because the UK lacks a formal risk-based system, enforcement varies by sector and applies only within the country. It does not carry the same global weight as the EU’s centralized framework.

Here is a breakdown of how the combined policies under the European Union AI Act and the United Kingdom regulatory principles should be interpreted:

The AI regulations being implemented focus on data sources and how they are used. The EU demands clear proof of provenance, which means keeping a detailed record of the origin of every dataset and its processing history. For B2B SaaS compliance, this means tightening vendor vetting and ensuring that any AI model used does not process prohibited data types.
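A provenance record of this kind can be sketched in a few lines of Python. This is a minimal illustration rather than a format prescribed by any regulator: the class names, field names, and sample values (`DatasetProvenance`, `license_terms`, `hypothetical-vendor-export`) are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
import hashlib


@dataclass
class ProcessingStep:
    description: str   # what was done, e.g. "dropped free-text columns"
    performed_at: str  # ISO 8601 timestamp in UTC


@dataclass
class DatasetProvenance:
    # All field names are hypothetical; adapt them to your own records.
    name: str
    source: str          # vendor, URL, or internal system the data came from
    license_terms: str
    content_sha256: str  # fingerprint of the exact bytes that were ingested
    history: list[ProcessingStep] = field(default_factory=list)

    def record_step(self, description: str) -> None:
        """Append a processing step, keeping the history chronological."""
        now = datetime.now(timezone.utc).isoformat()
        self.history.append(ProcessingStep(description, now))


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of a dataset's raw bytes."""
    return hashlib.sha256(data).hexdigest()


# Example with made-up data: register a dataset, then log one transformation.
raw = b"id,country\n1,SE\n2,DE\n"
record = DatasetProvenance(
    name="customer-sample",
    source="hypothetical-vendor-export",
    license_terms="internal use only",
    content_sha256=fingerprint(raw),
)
record.record_step("dropped free-text columns")
```

Storing a content hash next to the source makes it possible to show later that the bytes used for training are the same bytes that were vetted.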

For SaaS companies in 2025, artificial intelligence is no longer just about ensuring that AI systems perform their intended tasks. Under the EU AI Act, end users must be able to understand how artificial intelligence is used, the data it relies on, and its limitations. Regulators also expect clear explanations for high-impact applications, such as credit scoring, recruitment, or healthcare decisions.

The European Union's four categories of AI risk classification help to clarify compliance expectations. High-risk systems, whether SaaS or on-premises, include those used in hiring, financial services, or critical infrastructure. Due to the nature of the data processed, these systems face stricter testing, monitoring, and regulatory assessments.
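As a rough illustration, a product team could run a first-pass triage that flags features touching the high-risk areas named above. The sketch below is an assumption-laden helper, not a legal classifier: the tier names follow the way the Act is commonly summarized, the use-case tags are hypothetical strings, and anything not matched is deliberately left unclassified for manual review.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    # Tier names as the Act is commonly summarized (not taken from this article).
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Areas the article names as high-risk; the tag strings are hypothetical.
HIGH_RISK_USE_CASES = {"hiring", "financial_services", "critical_infrastructure"}


def provisional_tier(use_cases: set[str]) -> Optional[RiskTier]:
    """Return HIGH if any tagged use case is a known high-risk area.

    Returns None when nothing matches, meaning the feature still needs a
    manual assessment. It does NOT mean the feature is low risk.
    """
    if use_cases & HIGH_RISK_USE_CASES:
        return RiskTier.HIGH
    return None


resume_screener = provisional_tier({"hiring", "document_parsing"})
chat_widget = provisional_tier({"customer_support"})
```

Returning `None` instead of a default tier is a deliberate choice here: a keyword match can raise a flag, but only a proper review should assign a lower tier.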

Compliance with European AI regulations and UK AI policies goes beyond data processing. The latest policies require comprehensive record-keeping across the AI system lifecycle. This includes continuous monitoring, logging, and maintaining version histories of models to establish a verifiable audit trail.
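One way to make such an audit trail verifiable is to chain each log entry to the previous one with a hash, so that later edits become detectable. The following is a minimal in-memory sketch under that assumption. The function names and entry fields are hypothetical, and a production system would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Hash an entry body deterministically (keys sorted before encoding)."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_event(log: list, model_version: str, event: str) -> dict:
    """Append an event that is linked to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "event": event,
        "prev_hash": prev_hash,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute every hash and check that the chain is unbroken."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log: list = []
append_event(audit_log, "1.0.0", "model trained")
append_event(audit_log, "1.0.1", "decision threshold recalibrated")
```

Calling `verify(audit_log)` after the fact returns `False` if any stored entry has been altered, removed from the middle, or reordered.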

For B2B tech companies, compliance with AI regulations in 2025 starts in the design phase, not at product launch or implementation. Here are some practices that product teams can follow:

1. Transparent model training: Product teams must document training datasets, selection criteria, and decision measures to reduce bias. For example, AI recruitment models for clinical trials should reveal how the demographic data of patients is handled.

2. Extended testing timelines: Allocate defined testing periods, especially for high-risk use cases such as healthcare diagnostics or credit scoring.

3. Post-launch assessments: Compliance with the European AI Act and related regulations is a continuous process that does not end at deployment. Schedule periodic performance checks on real-world usage.
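The post-launch check in the last item can be as simple as comparing accuracy on recent labeled production samples against the figure recorded at release. The sketch below assumes classification outputs and a hypothetical tolerance (`max_drop`). Neither the metric nor the threshold comes from the regulations, and real checks would usually cover more than one metric.

```python
def accuracy(predictions: list, labels: list) -> float:
    """Fraction of predictions that match the ground-truth labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and equal in length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def needs_review(baseline_accuracy: float, predictions: list, labels: list,
                 max_drop: float = 0.05) -> bool:
    """Flag the model when accuracy falls more than max_drop below baseline."""
    current = accuracy(predictions, labels)
    return (baseline_accuracy - current) > max_drop


# Made-up sample of recent production predictions and their true labels.
recent_predictions = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
recent_labels = [1, 0, 0, 1, 0, 1, 1, 0, 1, 0]

flagged = needs_review(0.90, recent_predictions, recent_labels)
```

Running a check like this on a schedule, and writing each result to the audit log, gives a team dated evidence that monitoring actually happened.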

Seen from the perspective of innovation, European AI regulations present both advantages and challenges. Reactions from Big Tech companies such as Meta and Google at the recent Techarena Conference in Sweden highlighted concerns that the latest European regulations slow down AI advancement. However, partnering with machine learning compliance startups like Zango, which recently secured $4.8 million in funding to scale its services, can help bridge the gap.

The latest European AI Act and UK regulations are only the beginning. We can expect more policies guiding the responsible application of artificial intelligence. Here are steps for B2B technology and SaaS providers to stay ahead of future AI regulations:

The EU AI Act has exposed compliance struggles for Big Tech’s AI models, but its impact goes beyond penalties: it is also an opportunity to stand out. B2B technology and SaaS providers in 2025 should focus on how the Act is reshaping the standard for trust, accountability, and safety among customers, partners, and regulators. By integrating ethical principles, transparency, and extended testing timelines, AI product teams can drive responsible innovation that remains sustainable even as new regulations emerge.
